Abstract

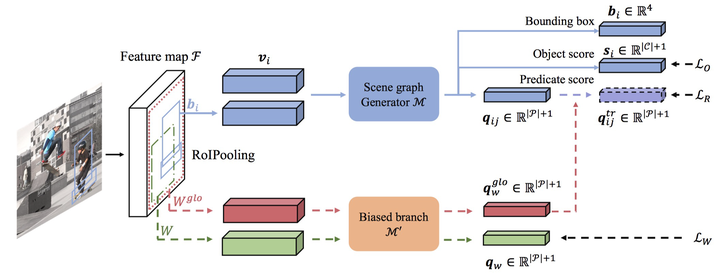

A scene graph is a structural representation of a scene, with objects as nodes and the relationships between pairs of objects as edges. Scene graphs have been widely adopted in high-level vision-language and reasoning applications, and scene graph generation has consequently become a popular research topic in recent years. However, it suffers from bias caused by the long-tailed distribution of relationships. Scene graph generators tend to predict head predicates, which are ambiguous and less precise. As a result, the generated scene graph conveys less information and degenerates into a mere collection of objects, which limits reasoning on the graph in downstream applications. To make the generator predict more diverse relationships and produce a precise scene graph, we propose a method called Additional Biased Predictor (ABP)-assisted balanced learning. It introduces an extra relationship prediction branch that is especially affected by the bias, so that the generator pays more attention to tail predicates rather than head ones. Unlike the scene graph generator, which predicts relationships for each object pair of interest, the biased branch predicts the relationships present in the image without being assigned a specific object pair, which is a more concise formulation. To train this biased branch, we automatically construct region-level relationship annotations from the instance-level relationship annotations. Extensive experiments on popular datasets, namely Visual Genome, VRD, and OpenImages, show that ABP is effective across different scene graph generators: it leads them to predict more diverse and accurate relationships and to produce more balanced and practical scene graphs.
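The abstract does not specify how regions are defined or how instance-level triplets are mapped to them. The following is a hypothetical sketch that assumes a region is an axis-aligned box and that a relationship is assigned to every region fully containing the union box of its subject and object. Under these assumptions, region-level multi-label predicate targets can be derived from instance-level `(subject, predicate, object)` annotations. The names `union_box`, `contains`, and `region_predicate_targets` are illustrative and do not come from the paper.

```python
from typing import List, Sequence, Tuple

# Axis-aligned box as (x1, y1, x2, y2); an illustrative convention, not the paper's.
Box = Tuple[float, float, float, float]


def union_box(a: Box, b: Box) -> Box:
    """Smallest box enclosing both the subject box and the object box."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def contains(region: Box, box: Box) -> bool:
    """True if `box` lies entirely inside `region`."""
    return (region[0] <= box[0] and region[1] <= box[1]
            and box[2] <= region[2] and box[3] <= region[3])


def region_predicate_targets(
    boxes: Sequence[Box],
    triplets: Sequence[Tuple[int, int, int]],  # (subject_idx, predicate_idx, object_idx)
    regions: Sequence[Box],
    num_predicates: int,
) -> List[List[int]]:
    """Hypothetical region-level labels: a multi-hot predicate vector per region.

    The pair-specific instance-level annotation is collapsed into
    "which predicates occur in this region", with no object pair assigned.
    """
    targets = [[0] * num_predicates for _ in regions]
    for subj, pred, obj in triplets:
        rel_box = union_box(boxes[subj], boxes[obj])
        for r_idx, region in enumerate(regions):
            if contains(region, rel_box):
                targets[r_idx][pred] = 1
    return targets


if __name__ == "__main__":
    boxes = [(10, 10, 50, 50), (40, 40, 90, 90), (200, 200, 220, 220)]
    triplets = [(0, 2, 1), (2, 3, 0)]
    regions = [(0, 0, 100, 100), (0, 0, 300, 300)]
    print(region_predicate_targets(boxes, triplets, regions, num_predicates=4))
    # -> [[0, 0, 1, 0], [0, 0, 1, 1]]
```

Passing a single region that covers the whole image reduces this to image-level multi-label targets, which fits a branch that "predicts the relationships in the image without being assigned a certain object pair". A multi-label loss such as binary cross-entropy would be a natural fit for such targets, though the abstract does not state which loss the biased branch uses.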